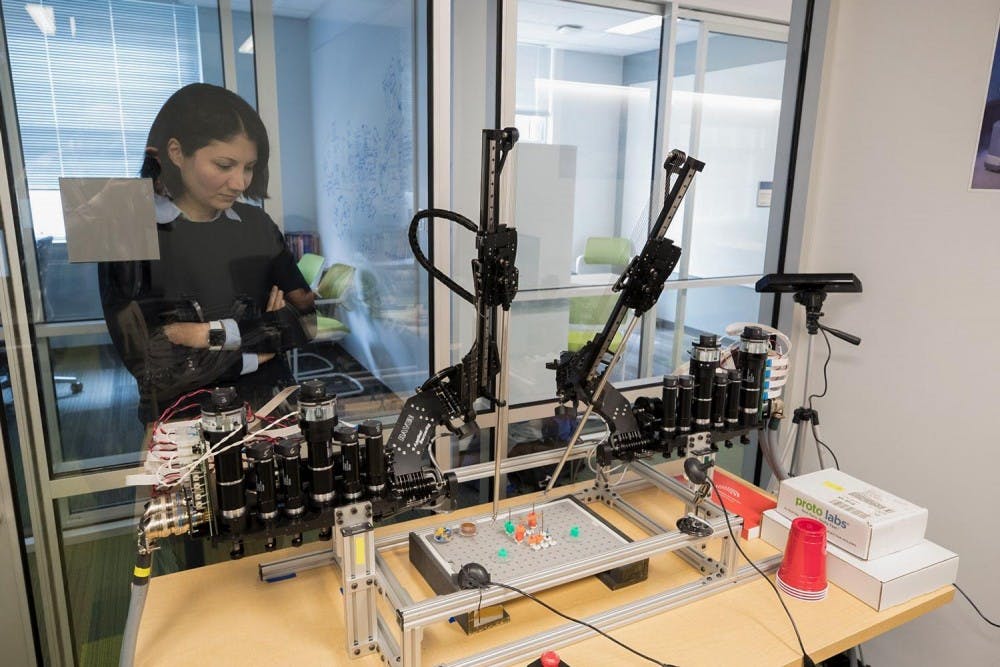

Homa Alemzadeh, an assistant professor at the Engineering School, is working with a team of surgeons, engineers and graduate students to make surgical robots smarter, meaning better able to anticipate human error.

Robot-assisted surgery was approved by the Food and Drug Administration in 2000 and has since become a widespread tool in hospitals across the country. Guided by a high-definition, three-dimensional stereoscopic view of the surgical field that enables accurate depth perception, a surgeon can use the robot to perform precise, minimally invasive surgeries.

According to a 2016 paper published in the scientific journal PLOS ONE by Alemzadeh and colleagues, robots can enable more reliable surgeries, but they can also cause adverse events that, while rare, can be devastating or fatal. Alemzadeh’s goal is to teach both the robot and the surgeon to anticipate and mitigate these adverse events before they happen, making surgery safer.

Alemzadeh first became interested in testing the safety of medical devices during her doctoral program at the University of Illinois at Urbana-Champaign. While she was designing hardware for a reliable heart rate monitoring device, her advisor asked whether she had seen any data on how those devices fail.

“I went on Google a little bit, and I found that there is this database from the FDA ... that people would actually report their experiences with medical devices, like when they see a failure or any issues while they're using it with the patient to the FDA,” Alemzadeh said. “They actually are mandated by FDA to go and report it.”

However, she discovered that while device failure data existed in an FDA database, it was not being fully utilized to improve the safety of those devices. Alemzadeh said this was a turning point in her graduate research, helping define her current work studying the causes and frequencies of surgical robot failures.

Since arriving at the University in January 2017, Alemzadeh’s work has focused on integrating human and robot data to make surgical robots more resilient against user-induced errors and less likely to cause harm to human patients.

One way to improve the safety and resiliency of surgical robots is what Alemzadeh calls “context-aware monitoring.” This method programs the robot with a greater sense of awareness of the tasks it is performing and builds in safety checks that are specific to that surgical context.

Samin Yasar, a graduate student who works under Alemzadeh, said context-awareness begins with breaking down a surgical task into individual subtasks. Suturing, for example, involves grabbing a needle, moving to the tissue, pushing the needle through the tissue with one hand and then pulling it through with the other hand. Each of these subtasks has a unique kinematic signature, a pattern of movement defined by its speed, direction and acceleration. This kinematic data can be fed back into the system to teach the robot which subtask it is performing, giving it greater awareness.
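To make the idea of a kinematic signature concrete, here is a minimal Python sketch that matches a short window of tool positions to the nearest known subtask. It is an illustration only, not the team's method: the subtask names, the signature values and the nearest-match rule are all assumptions, and direction is left out to keep the example short.

```python
import math

# Hypothetical signatures: (mean speed in mm/s, mean acceleration in mm/s^2).
# These numbers are invented for illustration, not measured from any robot.
SIGNATURES = {
    "grab_needle": (5.0, 2.0),
    "move_to_tissue": (30.0, 10.0),
    "push_needle": (8.0, 15.0),
    "pull_needle": (15.0, 5.0),
}

def summarize(positions, dt):
    """Reduce a window of (x, y, z) tool positions, sampled every dt seconds,
    to a (mean speed, mean acceleration magnitude) pair."""
    speeds = [math.dist(a, b) / dt for a, b in zip(positions, positions[1:])]
    accels = [abs(v2 - v1) / dt for v1, v2 in zip(speeds, speeds[1:])]
    mean_speed = sum(speeds) / len(speeds)
    mean_accel = sum(accels) / len(accels) if accels else 0.0
    return (mean_speed, mean_accel)

def classify(features, signatures=SIGNATURES):
    """Return the subtask whose signature is closest to the observed features."""
    return min(signatures, key=lambda name: math.dist(features, signatures[name]))

# Usage: a tool moving steadily along one axis at about 30 mm/s.
window = [(3.0 * i, 0.0, 0.0) for i in range(5)]
print(classify(summarize(window, dt=0.1)))
```

A real system would likely learn these signatures from recorded surgical data rather than hard-code them.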

“If [a] computer can actually reason about which part [of the surgery] we are in, then we can apply some kind of safety checks on top of that,” Alemzadeh said.

Part of Yasar’s work involves defining unsafe actions for each surgical task — such as inserting a needle too deep in the tissue while suturing — which will then be incorporated into the software as warnings to the robot’s operator.

“We try to detect the context and given the context we try to find out what are the safe and unsafe actions. If someone is executing unsafe actions, we at least try to warn them,” Yasar said.
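The approach Yasar describes, detecting the context and then checking actions against rules specific to that context, can be sketched in a few lines of Python. Everything here is hypothetical: the context names, the measurement names and the numeric limits are placeholders chosen for illustration, not values from the team's software.

```python
# Hypothetical per-context limits. Each key is "max_" plus a measurement name.
SAFETY_RULES = {
    "push_needle": {"max_depth_mm": 5.0},
    "move_to_tissue": {"max_speed_mm_s": 40.0},
}

def check_action(context, measurements, rules=SAFETY_RULES):
    """Return warnings for measurements that exceed the limits
    defined for the current surgical context."""
    warnings = []
    for key, limit in rules.get(context, {}).items():
        name = key[len("max_"):]  # e.g. "max_depth_mm" -> "depth_mm"
        value = measurements.get(name)
        if value is not None and value > limit:
            warnings.append(f"{context}: {name} {value} exceeds limit {limit}")
    return warnings

# Usage: the same needle depth is flagged only in the context where it matters.
print(check_action("push_needle", {"depth_mm": 7.5}))
print(check_action("grab_needle", {"depth_mm": 7.5}))
```

Keeping the rules in a table keyed by context means the same measurement can be safe in one subtask and flagged in another, which is the point of making the checks context-specific.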

Noah Schenkman — a urologist at the University Hospital who has been working with surgical robots since 2002 — said that while the technology of the robot has improved dramatically over the years, there are still problems regarding safety.

Schenkman said surgeon error remains the greatest source of adverse events during surgery. Improving user training and preparation can help prevent these kinds of events from occurring. Although training simulation systems exist for the robot, they are limited in their ability to adequately prepare surgeons for the challenges they might face in the operating theater.

According to Alemzadeh and Schenkman, existing simulation systems provide only technical training in how to physically manipulate the robot and do little to hone critical decision-making skills. They don’t model the kinds of real adverse events that can happen during surgery, such as unexpected bleeding or accidental movement of the robot arms.

Additionally, Alemzadeh said trainees encounter the same situation every time they practice on the simulation model, meaning that while they may be able to “beat the level” like a video game, they are not being prepared for difficult surgical scenarios.

To address this, Schenkman and his colleagues in the Health System, Leigh Cantrell of the Obstetrics and Gynecology Department and José Oberholzer of the Surgery Department, provide Alemzadeh with examples of different clinical scenarios that the simulation system should model.

Their goal is to create dynamic and realistic training modules that mimic real adverse surgical events. These modules will provide new scenarios every time and increase in difficulty so that more experienced trainees can continue to learn and be challenged.

Schenkman said the new modules would help trainees practice getting out of trouble during rare adverse surgical events.

“You have to be exposed to that in a simulated atmosphere so that when it happens in real life, you're able to cope with the situation,” he said.

Some of Schenkman’s resident physicians will be part of a pilot study this summer to test whether exposure to adverse events in Alemzadeh’s simulation system better prepares surgeons-in-training for actual surgery than the existing simulation technologies.